Reflection

Actual vs Intended

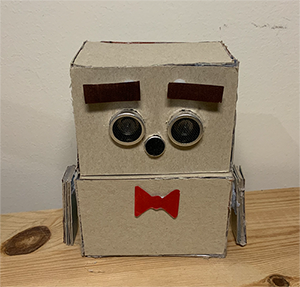

Overall, I feel that the final product followed the intended concept closely, and I am really happy with how it turned out. The parts that did not follow the intended concept were the portability features and the noise volume detection, which I had to simulate.

I would have wanted Spud's body to be 3D printed so that it was sturdier and smaller, with all the wiring and parts hidden inside and no connection to the laptop.

Undoubtedly, Spud would have been built better by a team of four. However, as it became an individual project, I had to limit how many features I could add to Spud, deciding what I could implement and what I had to simulate.

Other Work in the Domain

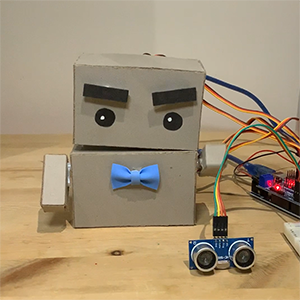

Robots normally talk or use different sounds to convey something, and sometimes use a screen for facial expressions, but I wanted to do something different with Spud.

The use of eyebrows and movement of the head and arms turned out to be a good choice for conveying emotion, especially the eyebrows, which were something novel as they are rarely seen in robots and are surprisingly expressive.

Team Domain & Studio Theme

I believe Spud has accurately upheld the team domain "Sassy Tech" and the studio theme of "designing for playful and open-ended interaction approaches". Spud is sassy and playful in its own way, helping its user externalise their mood through its eyebrows and body movement.

People can also interact with it by getting close to the user or by asking it to do tricks with voice commands.

Project Outcomes

Did Spud meet the project's desired outcomes?

1. Does Spud help the user to keep others away and stop others from being loud in the Alert mode?

Partially. In testing with participants, and judging from the reactions of exhibition visitors, Spud's warning and stop facial expressions were amusing and cute to look at, so they did not work as intended.

However, the scared expression did work, as the test participants felt bad when Spud was scared. The warning expression can be kept the same, but Spud's stop movement should be changed completely to something more aggressive, like the Helping Hand.

2. Does Spud help the user to socialise with others in the friendly mode?

Yes. From the testing done and the reactions of the exhibition visitors, Spud is very likeable and popular, which would help the user socialise with others.

3. Does Spud react correctly in different situations?

Partially. The distance sensor worked well to detect people walking nearby, but the voice recognition for tricks was inaccurate at times and the noise volume detection was simulated. A more accurate voice recognition tool should be used and the volume detection should be implemented.

4. Is Spud portable and able to be brought around by the user?

No. The final product is big, with a wire connected to the laptop, so it is not portable. However, a smaller version of Spud was built at the ideal size to show people what Spud should actually look like.

5. Are the different facial expressions and body positions of Spud understandable by everyone?

Yes. In the user testing, all the participants could clearly identify what Spud was trying to convey with its different movements.